As the influence of artificial intelligence (AI) on media content grows, parents and guardians will be faced with new challenges in keeping their children safe in the digital world. To ensure the online environment is safer for younger users, The Mannerheim League for Child Welfare is demanding that social media companies implement clear labelling for all AI-generated content.

Artificial intelligence, or AI, is proving to be somewhat divisive; some see it as a giant leap forward and a tool that can help humanity, while others seem worried it will do more harm than good.

On 5 June 2023, YouGov published an article by Matthew Smith¹, Head of Data Journalism. It opened with the words “Concerns for an AI apocalypse rise in last year”, followed by “There has been a 10pt increase in the number who see artificial intelligence as a top threat to human survival.”

There will be those who will argue that a broad-reaching technology such as AI takes time to be implemented, and once it has been, people will see its full benefits. However, it would appear from research in the article that there is a long way to go before the majority will be convinced.

The general populace is not alone in its concerns; some of the world’s leading minds working in the technology sector are also worried. For example, a CNBC article³ twice mentioned Elon Musk’s stark words at the World Government Summit in Dubai, United Arab Emirates: “One of the biggest risks to the future of civilisation is AI.”

Irrespective of what one’s view is on artificial intelligence and AI-generated content, it is already here, and people of every age will find it difficult to avoid its reach. It’s why organisations such as The Mannerheim League for Child Welfare are demanding sufficient safeguards, such as clear warnings, be implemented now.

Europol predicts that by 2026, AI could be responsible for creating up to 90% of all media content². Combined with existing online threats, this escalating reality underscores the need for prompt, effective parental guidance on children’s media use and for clear labelling of AI-generated content.

Artificial intelligence has a significant impact on children’s media use, as algorithms determine what videos children watch, what news they see, and what kind of content they consume. With AI, the line between reality and imagination has blurred. Even adults find it nigh-impossible to recognise the outputs of artificial intelligence, and for children, the situation is even more challenging.

In addition, artificial intelligence can already be seen as a tool for bullying, particularly through the manipulation of images and videos. Social media companies play a crucial role in mitigating risks and ensuring the safety of children online.

“We need an identifier to clarify whether the content is reality-based or AI-produced, much like the Parental Advisory label helps identify content harmful to children. This becomes even more important as children’s media literacy struggles to keep up with the rise of AI,” says Paula Aalto, head of school cooperation and digital youth work at The Mannerheim League for Child Welfare.

Eye-opening film calls for action

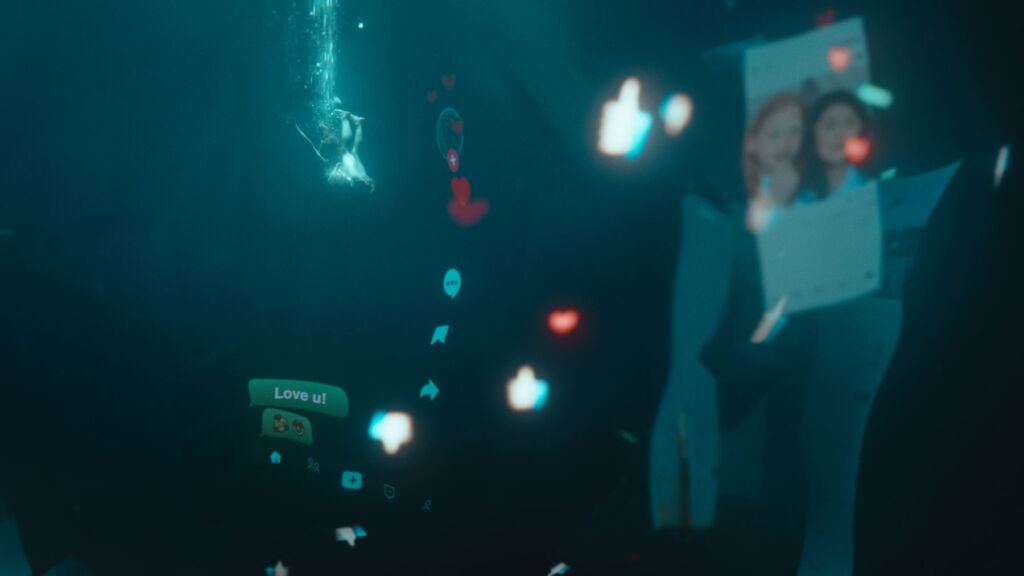

The Mannerheim League for Child Welfare has released a powerful short film demonstrating the dangers of unsupervised media consumption by children and highlighting the need for clear labelling of AI-generated content.

The film “The AI Abyss” is a plunge into the uncharted waters of AI in the media. It parallels the League’s (MLL’s) long-standing tradition of empowering children, dating back to its initiatives to teach children to swim 50 years ago. Today, the challenge lies not in water but in the media stream, where AI increasingly blurs the line between reality and fiction.

Final thoughts

What The Mannerheim League for Child Welfare is demanding from social media companies is the right thing to do. However, the worry is that if Elon Musk, one of the AI pioneers and the world’s richest person, is seemingly unable to alter the direction of AI, others will undoubtedly encounter similar problems.

The demand for clear labelling of AI-generated online content to protect children is a direct appeal to social media companies’ better nature. However, as history has shown, doing the right thing and generating profit are often in conflict, and many will believe that profit ultimately triumphs.

Over the coming years, AI-generated content will become a bigger factor in people’s lives. Although many like to think that the driving forces behind it are the betterment of humanity and entertainment, I am in two minds. On the one hand, I want to believe that it can be a tool for good; on the other, I feel that an equally significant driving force is the generation of money, and I know what my money will be on.

References:

- ¹ https://yougov.co.uk/topics/technology/articles-reports/2023/06/05/concerns-ai-apocalypse-rise-last-year

- ² https://www.europol.europa.eu/cms/sites/default/files/documents/Europol_Innovation_Lab_Facing_Reality_Law_Enforcement_And_The_Challenge_Of_Deepfakes.pdf

- ³ https://www.cnbc.com/2023/02/15/elon-musk-co-founder-of-chatgpt-creator-openai-warns-of-ai-society-risk.html
